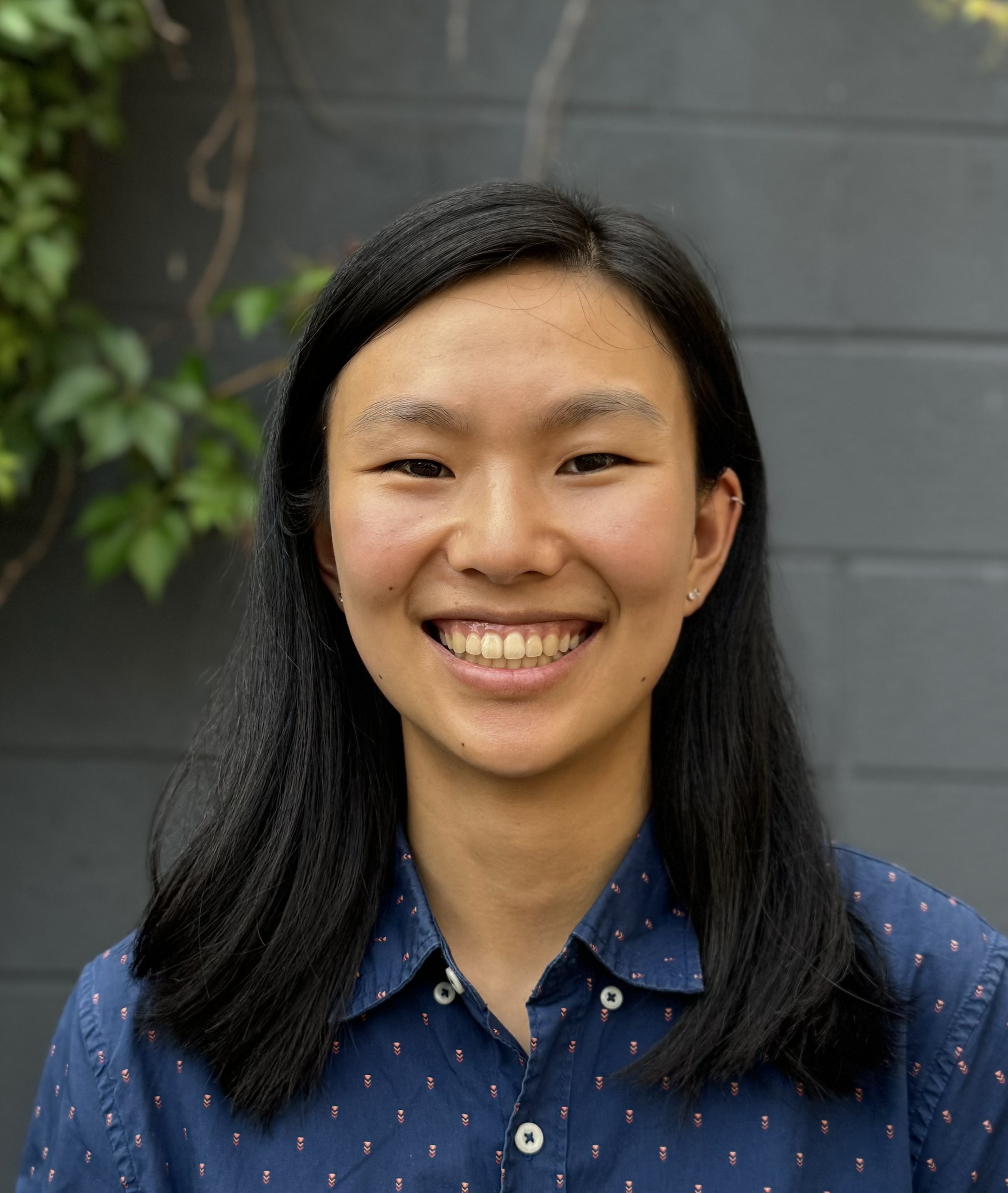

I am an Assistant Professor at Cornell Tech and in the Department of Information Science at Cornell University, as well as a field faculty member in Computer Science and Data Science.

I am currently recruiting both PhD students and postdocs. If you are an interested PhD student, please apply directly to either the Cornell Information Science or Cornell Computer Science PhD programs and list my name on your application. Read this for more information.

My research is on responsible AI (see my research statement, Spring 2024). Some themes I am interested in include:

AI fairness (FAccT 2022, ICCV 2023 oral, PNAS 2025, FAccT 2025, ACL 2025 best paper): How can we move beyond one-size-fits-all, mathematically convenient notions of fairness while still being tractable?

We expand the frame of technical approaches, which often oversimplify social concepts through mathematically convenient but harmful abstractions. Examples include treating intersectionality as simply a problem of multiple groups (FAccT 2022), operationalizing fairness by treating all groups the same (ACL 2025 best paper), and treating racism and sexism as symmetrical forms of oppression (FAccT 2025). In doing so, we keep practical constraints in mind to propose tractable fine-tuning interventions (ICCV 2023 oral) and bias measurements (PNAS 2025).

Evaluation (ICML 2021, Patterns 2024, ICML Position 2025, Preprint 2025): How can we measure multi-faceted constructs in generative AI, like reasoning and fairness, and grapple with the trade-offs between incommensurate values?

Benchmarks and leaderboards shape the norms and goals of a field, and what we choose to measure sets our priorities (ICML 2021). Instead of relying on single leaderboard numbers to capture abstract constructs such as reasoning and fairness in open-ended generative models (Patterns 2024), we should use multi-faceted measurements and a more rigorous lens of validity and measurement theory (ICML Position 2025, Preprint 2025).

Societal impacts of AI (JRC 2023, Nature Machine Intelligence 2025): What are the effects of AI on aspects of humanity such as our information ecosystem and power asymmetries?

As AI is increasingly deployed, some common use cases pose distinct normative concerns. These include predicting future outcomes about individuals (JRC 2023) and simulating human participants with LLMs (Nature Machine Intelligence 2025). I am especially interested in thinking about AI as an epistemic technology and its effects on the information ecosystem (in submission).

My work has been covered by outlets like MIT Technology Review, Vice, Washington Post, New Scientist, and Tech Brew.

Previously, I was a postdoc at Stanford University HAI, earned my PhD in Computer Science at Princeton University advised by the wonderful Olga Russakovsky, and received a BS in Electrical Engineering and Computer Science from UC Berkeley. I've received the NSF GRFP, EECS Rising Stars, Siebel Scholarship, Microsoft AI & Society Fellowship, and have interned at Microsoft Research and Arthur AI.

An FAQ about algorithmic fairness.