A lot more people in the ML and broader research community have been thinking about issues of algorithmic fairness and societal impact. I’ve compiled here a set of frequently asked questions that often come up when folks from primarily technical research backgrounds start thinking about this space. This is a living document, please provide any feedback here.

+ What is algorithmic fairness and why is it important?

Algorithmic fairness broadly refers to the disparate impact that algorithms can have towards different demographic groups, e.g., Black people, women, Black women. This is an important problem because technology has disproportionately benefited the more privileged over the less privileged, exacerbating societal inequalities. We need to ensure that the advances we build today do not continue that trend as AI and algorithms become more prevalent in real-world settings.

For example, in the American criminal justice system, an algorithmic risk prediction tool had higher false positives for Black people than White people. In the healthcare setting, Black patients assigned the same risk score for triage prioritization as White patients were found to actually be sicker. In job hiring, automated screening tools downranked resumes that contained the word “women,” like in “women’s tennis team.”

Overall, fairness work can employ a broad range of methods and topics. From quantitative work such as constrained optimization problems and deep learning, to more qualitative work such as user studies and policy analyses. ACM FAccT and AAAI/ACM AIES are two of the prominent top-tier fairness conference venues. As some examples of what algorithmic fairness research looks like, the following papers won the best paper awards from FAccT 2024:

For example, in the American criminal justice system, an algorithmic risk prediction tool had higher false positives for Black people than White people. In the healthcare setting, Black patients assigned the same risk score for triage prioritization as White patients were found to actually be sicker. In job hiring, automated screening tools downranked resumes that contained the word “women,” like in “women’s tennis team.”

Overall, fairness work can employ a broad range of methods and topics. From quantitative work such as constrained optimization problems and deep learning, to more qualitative work such as user studies and policy analyses. ACM FAccT and AAAI/ACM AIES are two of the prominent top-tier fairness conference venues. As some examples of what algorithmic fairness research looks like, the following papers won the best paper awards from FAccT 2024:

- Algorithmic Pluralism: A Structural Approach To Equal Opportunity

- Auditing Work: Exploring the New York City algorithmic bias audit regime

- Learning about Responsible AI On-The-Job: Learning Pathways, Orientations, and Aspirations

- Real Risks of Fake Data: Synthetic Data, Diversity-Washing and Consent Circumvention

- Akal Badi ya Bias: An Exploratory Study of Gender Bias in Hindi Language Technology

- Recommend Me? Designing Fairness Metrics with Providers

+ I just do computer science/theory/technical work, how is this relevant to me?

If you believe your research has any real-world impact, then it inherently carries the potential for social impact. Even the most theoretical science generally claims some degree of relevance to the real world, and much of technology is inherently dual-use—it can lead to outcomes that are beneficial, harmful, or somewhere in between.

For example, if you are working with facial datasets like CelebA, it’s worth considering the potential surveillance applications of computer vision. If you are working on object recognition, whether those systems will perform well on the objects of countries which may look different from those in the USA or Western Europe. If you are working on image captioning, whether the captioning systems trained on captions generated by sighted users (COCO dataset) will generalize to the different kinds of captions desired by blind users (VizWiz dataset). If you are working on motion capture systems, how incorrect assumptions about bodies fail to generalize across diverse body types. And if you are working with any labeled dataset, where that dataset came from and whether participants provided consent or received proper compensation (e.g., participants have previously reviewed distributing content for two to three dollars an hour).

While it may be easy to justify your own work as simply research, and these issues as those that arise during application, research findings have an impact on deployment practices. For instance, the best image captioning model according to research practice may be taken as the base model for a deployed application. However, this model may still do poorly when used as an assistive technology for blind and low vision users.

That being said, of course not every ML work needs to be fairness work. However, possible fairness implications are important to be aware of, and if significant enough, described as a broader impact or limitations section. This can ensure that technology is developed ethically from the start. One useful analogy here is to security by design (i.e., security built in foundationally from the start) compared to bolt-on security, which is less effective. Another useful analogy is to technical debt, where there will be time-consuming and expensive future consequences to taking shortcuts and not doing sufficient testing now. Ethical debt occurs when we prioritize values now that will lead to eventual ethical consequences.

For example, if you are working with facial datasets like CelebA, it’s worth considering the potential surveillance applications of computer vision. If you are working on object recognition, whether those systems will perform well on the objects of countries which may look different from those in the USA or Western Europe. If you are working on image captioning, whether the captioning systems trained on captions generated by sighted users (COCO dataset) will generalize to the different kinds of captions desired by blind users (VizWiz dataset). If you are working on motion capture systems, how incorrect assumptions about bodies fail to generalize across diverse body types. And if you are working with any labeled dataset, where that dataset came from and whether participants provided consent or received proper compensation (e.g., participants have previously reviewed distributing content for two to three dollars an hour).

While it may be easy to justify your own work as simply research, and these issues as those that arise during application, research findings have an impact on deployment practices. For instance, the best image captioning model according to research practice may be taken as the base model for a deployed application. However, this model may still do poorly when used as an assistive technology for blind and low vision users.

That being said, of course not every ML work needs to be fairness work. However, possible fairness implications are important to be aware of, and if significant enough, described as a broader impact or limitations section. This can ensure that technology is developed ethically from the start. One useful analogy here is to security by design (i.e., security built in foundationally from the start) compared to bolt-on security, which is less effective. Another useful analogy is to technical debt, where there will be time-consuming and expensive future consequences to taking shortcuts and not doing sufficient testing now. Ethical debt occurs when we prioritize values now that will lead to eventual ethical consequences.

+ What equation is best to measure fairness? And can we optimize for it?

There is no single measure for fairness (sorry!), only measurements that capture different facets of this complex construct. As an example, consider how a bank should distribute a loan. Is it fair if women and men receive the same number of loans? What if more men applied for loans than women? What if more qualified men applied for loans than qualified women? What if women are only applying for less loans because historically they were not allowed to and have been socialized to seek out life paths which require less loans? How can we consider the emotional costs incurred when someone is rejected for a loan they need to pay urgently due rent? And what about non-binary people? No single measure can capture all of this nuance, and one certainly cannot when you consider all of the domains that AI might be used in, e.g., healthcare, hiring.

Added on to this difficulty of measurement is that machine learning outputs are rarely used directly. Generally, the output is used to assist a human decision-maker, so even knowing the error rate of the model is insufficient, since humans act with discretion on top of the data (when e.g., hiring, policing). This makes it hard to optimize models at just the model-level, without engaging with how the model will be used in practice. Ultimately, fairness is a sociotechnical problem, and no technology-only intervention can solve it.

The above were only examples about predictive AI (i.e., forecasting future outcomes). Generative AI (i.e., creating novel content) that has multi-modal outputs can be more complicated because of its open-ended outputs, and can lead to different manifestations of fairness-related harms, such as stereotyping. Thinking about fairness as a simple equation or quota can not only be overly simplistic but also lead to undesirable outcomes, such as racially diverse Nazis.

This may feel unsatisfying, and for those used to thinking about problems as having an "objective" answer, it can be uncomfortable to sit with ambiguity. However, a big part of fairness research is embracing this ambiguity and partiality in the science, and making progress nonetheless. Because while there is no definite way to achieve total fairness, there are still ways to characterize one model (e.g., which grants only men loans) as less fair than another (e.g., which grants comparable proportions of people from each gender loans).

Added on to this difficulty of measurement is that machine learning outputs are rarely used directly. Generally, the output is used to assist a human decision-maker, so even knowing the error rate of the model is insufficient, since humans act with discretion on top of the data (when e.g., hiring, policing). This makes it hard to optimize models at just the model-level, without engaging with how the model will be used in practice. Ultimately, fairness is a sociotechnical problem, and no technology-only intervention can solve it.

The above were only examples about predictive AI (i.e., forecasting future outcomes). Generative AI (i.e., creating novel content) that has multi-modal outputs can be more complicated because of its open-ended outputs, and can lead to different manifestations of fairness-related harms, such as stereotyping. Thinking about fairness as a simple equation or quota can not only be overly simplistic but also lead to undesirable outcomes, such as racially diverse Nazis.

This may feel unsatisfying, and for those used to thinking about problems as having an "objective" answer, it can be uncomfortable to sit with ambiguity. However, a big part of fairness research is embracing this ambiguity and partiality in the science, and making progress nonetheless. Because while there is no definite way to achieve total fairness, there are still ways to characterize one model (e.g., which grants only men loans) as less fair than another (e.g., which grants comparable proportions of people from each gender loans).

+ What’s with the fairness impossibility theorems?

The fairness impossibility theorems (Chouldechova 2016, Kleinberg et al. 2016) demonstrate that in most real-world settings of binary classification, it is impossible to satisfy two reasonable measures of fairness (e.g., equal error rates across groups and calibrated predictions for each group) simultaneously. Some have pointed to these results as evidence that no matter what we do, fairness cannot be achieved. However, that mistakes each of the equations implicated in the impossibility theorems for a full notion of fairness: "What is strictly impossible here is the perfect balance of three specific group-based statistical measures. Labeling a particular incompatibility of statistics as an impossibility of fairness generally is mistaking the map for the territory" (Green and Hu 2018). Instead, this tells us that algorithmic fairness is a substantively hard sociotechnical problem, where we cannot rely on just the satisfaction of various statistical criterion.

+ When will we know that our model is sufficiently fair?

Fairness is not a static, or possible, ideal to be achieved. It will always involve trade-offs that must be continuously re-negotiated. Some have argued that we should start thinking about models as simply whether they are "fair enough," because there will always remain a reasonable fairness equation that is unsatisfied. However, we should not think about only needing algorithms to be “fair enough” as a lower threshold that allows us to put our hands up and give up on fairness. Instead, this means that we have to be even more careful in how we justify why we believe a particular model is fair enough for all of the stakeholders involved, and that enough alternatives were considered.

+ Isn’t model bias just a dataset bias problem though?

Imagine saying that "model accuracy is just a dataset quality problem." Of course model bias (and model accuracy) depend on dataset bias (and dataset quality), but there are many other factors at play, such as architecture, loss function, target variable. Beyond that, models can amplify bias beyond what is in the dataset.

Additionally, the narrative that model bias is just dataset bias is unproductive. It has often been employed by model trainers to punt the problem to a different part of the machine learning pipeline. But, there is no such thing as an unbiased dataset: even if you were to somehow collect every single image available in the world, it would still not be an unbiased look at the world because it would be mediated by what humans chose to take pictures of, and which humans were the ones taking the pictures. On top of that, the world itself is biased and we may not want to be replicating that bias with our models. So, a more productive way of thinking about the problem is taking a multi-faceted approach to not only reducing dataset bias, but also addressing all of the other stages in a machine learning life cycle.

Additionally, the narrative that model bias is just dataset bias is unproductive. It has often been employed by model trainers to punt the problem to a different part of the machine learning pipeline. But, there is no such thing as an unbiased dataset: even if you were to somehow collect every single image available in the world, it would still not be an unbiased look at the world because it would be mediated by what humans chose to take pictures of, and which humans were the ones taking the pictures. On top of that, the world itself is biased and we may not want to be replicating that bias with our models. So, a more productive way of thinking about the problem is taking a multi-faceted approach to not only reducing dataset bias, but also addressing all of the other stages in a machine learning life cycle.

+ Perhaps a fair model would be one that doesn’t use race, or gender, or any of those attributes?

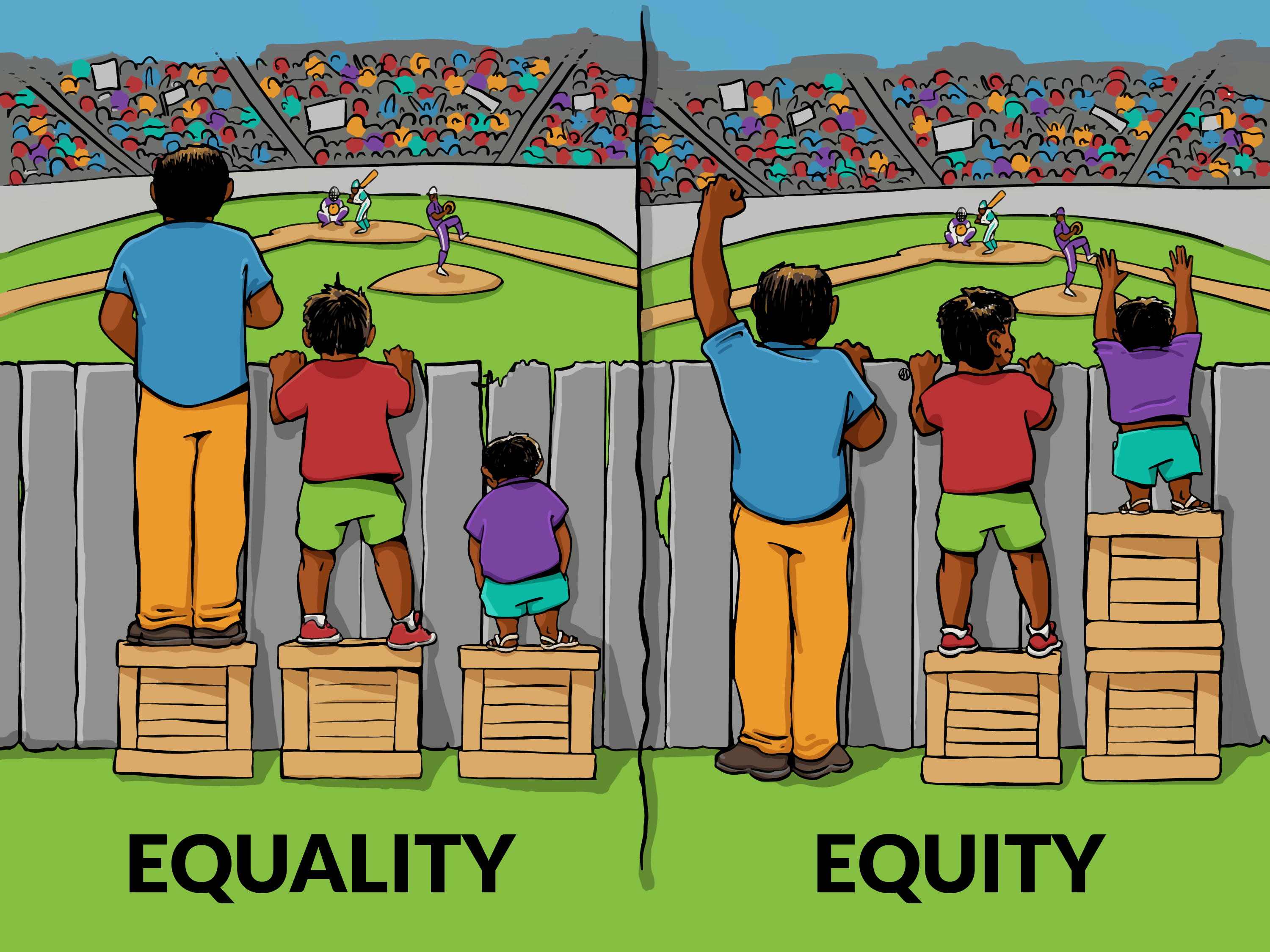

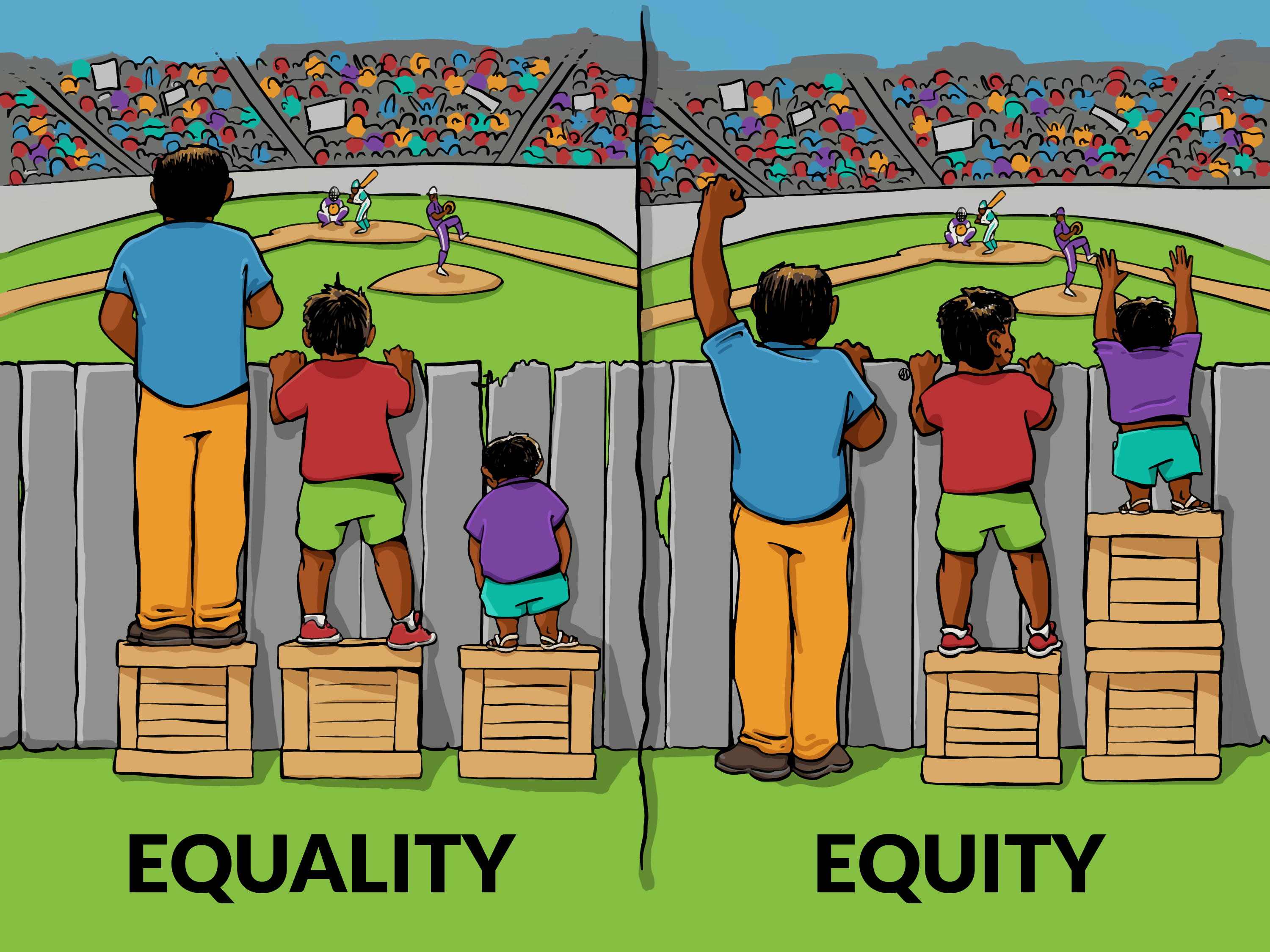

This is often called "fairness through blindness" in the community, contrasting with "fairness through awareness." Besides the fact that it is nearly impossible to remove all possible proxies for race from a model (e.g., even zip code as an input feature encodes a lot of information about race because of the racial segregation of neighborhoods in America), this is likely not what is desired. For example, we can imagine a person who claims they “do not see race.” While there might be good intentions behind such a statement, this fails to recognize that race, gender, and other attributes have very real impacts on individuals in our society (e.g., gender pay gap, racial discrimination through redlining), and a model which fails to acknowledge this is one which is operating in a social vacuum.

Image credit: Interaction Institute for Social Change | Artist: Angus Maguire

Image credit: Interaction Institute for Social Change | Artist: Angus Maguire

+ Since fairness is "sociotechnical," is it still computer science?

There is certainly a lot of fairness work that would not be considered computer science. But there is also a lot of fairness work that is computer science! In fact, computer science expertise is important in this space. It is needed to build and evaluate AI systems to ensure they align with our desires. Without computer science expertise, we would not have sound ways to understand the workings of computer systems to measure and mitigate their fairness impact. Measurements informed by CS expertise can interrogate the inner workings of ML models to better direct interventions, instead of only at the output stage. Research on spurious correlations, out-of-distribution classification, robustness, are all technical directions with implications for fairness.

A related computer science subfield is human-computer interaction, which has historically sometimes had trouble getting accepted by computer science departments as sufficiently "computer science." This can be at least partially attributed to the hierarchies of knowledge about what is valued as "difficult," "important," and "objective," knowledge.

A related computer science subfield is human-computer interaction, which has historically sometimes had trouble getting accepted by computer science departments as sufficiently "computer science." This can be at least partially attributed to the hierarchies of knowledge about what is valued as "difficult," "important," and "objective," knowledge.

+ But aren’t humans just as biased as machines? At least with machines we can better scrutinize them.

This is true in certain respects, but we have to also remember the downsides of machine decision-making compared to human decision-making. For one, with a human decision-maker, there is often the opportunity for discretion and judgment that can account for context (e.g., a traffic accident that caused someone to miss their appointment time) or errors (e.g., a typo on a name form). Of course, this discretion comes with its own risks of bias and inconsistencies. While algorithms can be designed to permit more leniency, in practice, they generally follow rigid rules without adequate intervention and contestability mechanisms. Second, there’s a form of automation bias where people believe that decisions from machines are both more accurate and more objective, regardless of whether that’s true. Third, human decision-makers can be held accountable for their actions and learn from their mistakes in a more dynamic way than current model updates. And a fourth reason is that ML systems scale in a dramatic way compared to human decision-makers. Compared to the impact a human decision-maker could make, one predictive algorithm in the Netherlands for detecting welfare fraud wrongly accused 26,000 parents of welfare fraud, driving families into debt. Another externality of this scale is how widespread the harms can be. While individual humans may be more or less biased in different ways such that the noise of one decision-maker (e.g., a hiring manager at Company A) can be different from the noise of another (e.g., a hiring manager at Company B), one biased ML model can be rapidly deployed across a wide range of settings and enact the same bias against the same individuals. These four factors as well as others make the calculus more complicated than the question initially suggests. Whether a human or machine or both in conjunction are better to be deployed in a particular application requires navigating many trade-offs and, as is a common refrain in fairness, “depends on the application.”

+ I’m a fairness researcher. These answers feel a bit simplistic.

Yes, this is only an introduction, and at times compromised on nuance for clarity—and to not sound *too* preachy. If you have any feedback, or examples you have felt better communicate these concepts, please submit them here!

+ Where can I learn more?

The following books are great places to start:

- Race After Technology by Ruha Benjamin

- Algorithms of Oppression by Safiya Noble

- Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor by Virginia Eubanks

- Weapons of Math Destruction by Cathy O'Neil

- Data Feminism by Catherine D'Ignazio and Lauren F. Klein (or the shorter Data Feminism for AI)

This is inspired by Sanmi Koyejo and Olga Russakovsky’s CVPR 2022 tutorial Algorithmic fairness: why it’s hard and why it’s interesting. That tutorial is also a fantastic place to learn more. I am grateful to feedback from Anastasia Koloskova, Sanmi Koyejo, Olga Russakovsky, Brent Yi. This FAQ was posted in January 2025.